After a decade and a half in the IT industry, it’s pretty clear to me that a few clichés do indeed ring true. First, “change” really is the only constant, and second, the pace of that change keeps accelerating. This is why “IT complexity” sits at the very top of the buzzword charts in articles from just about every industry in today’s economy.

This got me thinking: is it really harder today than it was back at the turn of the century? I mean, hasn’t IT always been complex, and hasn’t change always been difficult to manage? The answer, of course, is yes. However, modern-day IT is undergoing multiple unprecedented shifts in business strategy and technology.

To set the stage, here are a few quick primer notes:

- Most companies are still in the middle of digital transformation. The Internet has created new business models and innovative ways to engage customers and partners (try to imagine Amazon without customer reviews), but that evolution is ongoing.

- To compete in the digital world (which is now dominated by rich web and mobile applications), businesses must move faster than ever. The digital business era has become synonymous with speed.

- In response, businesses have started to adopt new IT philosophies like DevOps, which seeks to bring the principles and benefits of agile software development to IT services (e.g., fast time-to-market, continuous delivery).

This “need for speed” has pushed the technology industry to evolve in kind. Virtualization and software-defined infrastructure give IT the flexibility to optimize resource utilization and to allocate, orchestrate and repurpose resources dynamically and systematically as application needs emerge and change.

Hardware has become converged, combining server, storage and networking resources with management software. Public and private cloud offerings now enable companies, or third parties, to build, test, deploy and even maintain new applications on the fly.

This is where the complexity shift has really ramped up. The days of a company’s applications running exclusively within the walls of its own data center, on purely physical hardware, are long gone. Today, an application might run in an owned data center, in the public cloud, or in a combination of both (hybrid IT), and it likely has some component that is virtual or even ephemeral. Infrastructure has gone from static to dynamic: from something you could see and touch to a state where you may not even know where application components are running. On top of that, new technologies don’t give IT Operations license to forget about or neglect the legacy systems that still run critical functions (bimodal IT).

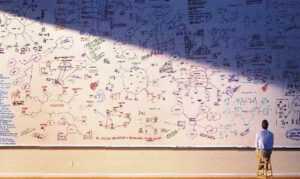

The complexity and rate of change of the modern IT landscape have reached a point where it is impossible to visualize, understand or make decisions about it without the help of automation. This is a (relatively) new phenomenon, and it changes the way IT teams need to approach their day-to-day jobs.

So what’s the next step? Change isn’t going to decelerate, and IT Operations is still on the hook for availability. For performance. For agility. For SLAs.

Maybe it’s time to adopt infrastructure monitoring that’s built for modern IT applications and services… Then again, I’m a little biased, since I work for a company that provides exactly that.